Advancing AI Is Alarming but There’s Hope

By Polco on July 23, 2022

Social AI can distort views of reality. Well-designed technology can make it better.

- By Jessie O’Brien -

Our cognitive limits can’t keep pace with the growing complexity of contemporary issues. It’s like cycling down a steep hill while your legs struggle to keep up with the pedals. Social media and fast-advancing technology are making things worse by facilitating the spread of misinformation.

The outcome is a more polarized and disoriented society that struggles to distinguish the true from the false. Misinformation is particularly harmful to government leaders, who must gauge resident sentiment and make decisions for everyone, decisions that can have lasting effects.

Part of Polco’s mission is to help solve this massive problem with tools designed to rebuild trust and drive an informed and collaborative culture at the local government level.

“We believe well-designed and well-built technology can stem this slide,” Polco CEO Nick Mastronardi said. “We have seen success in this mission in many communities in many states. We now want to bring that success nationwide.”

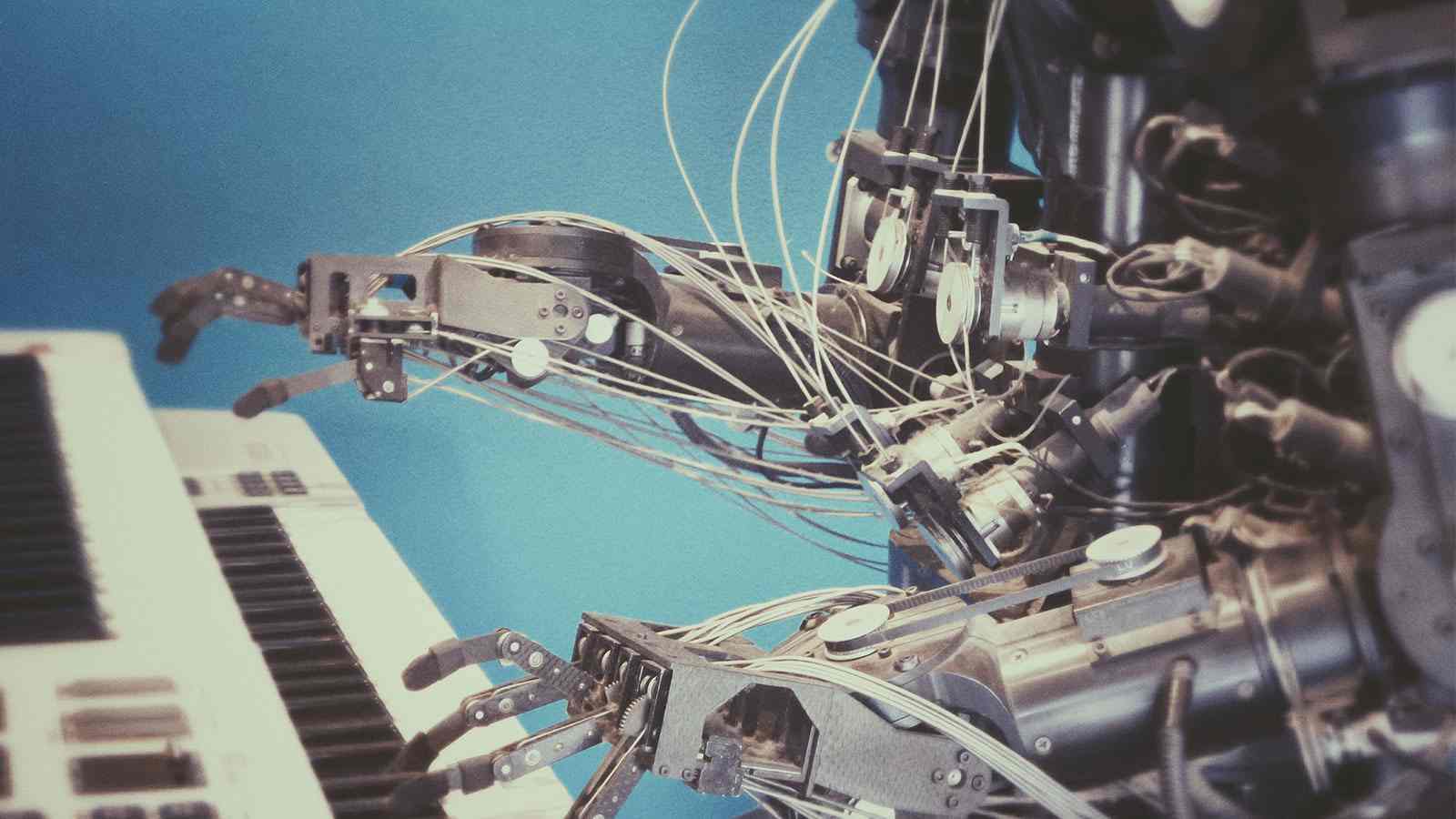

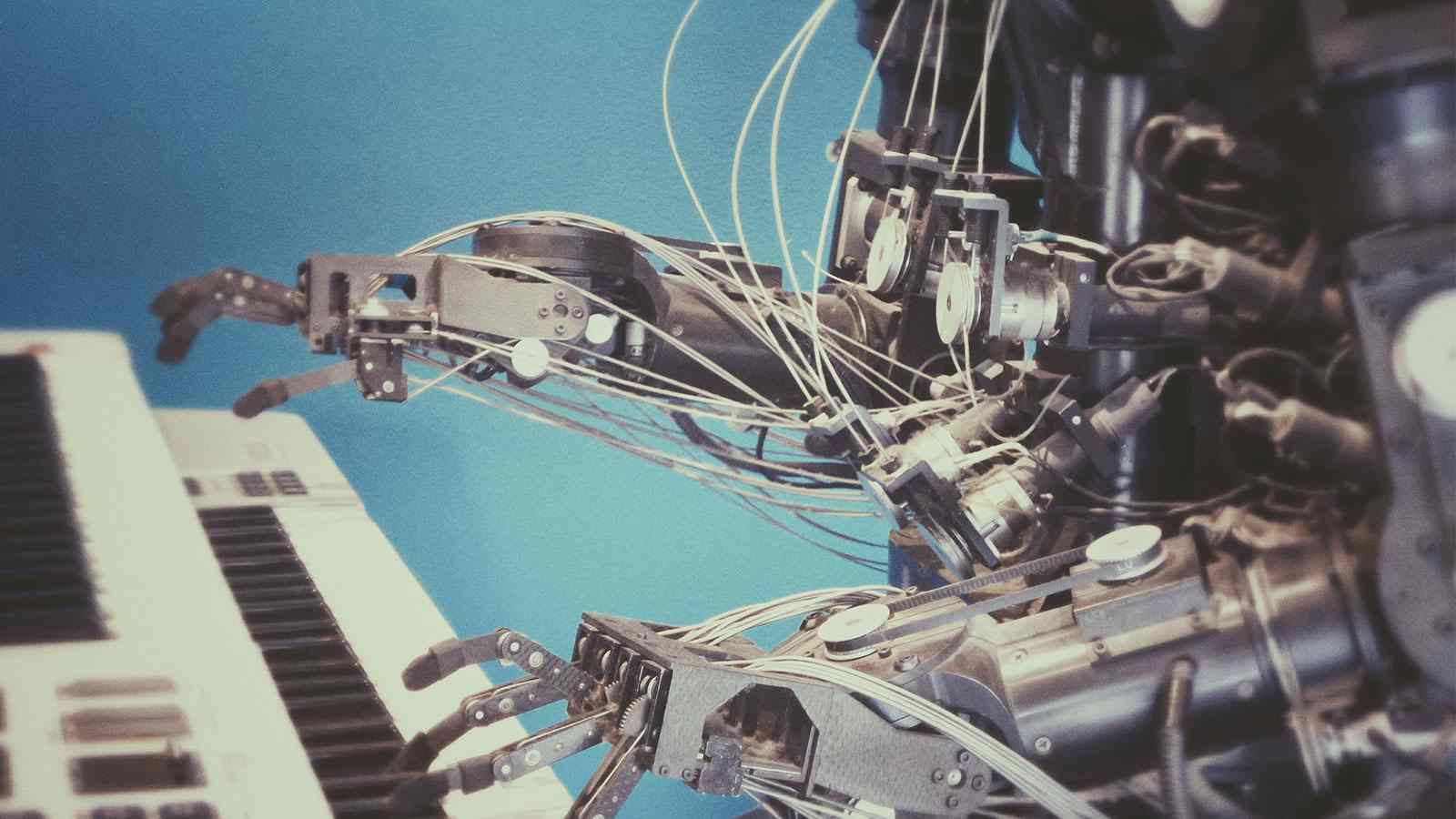

More Human-like AI

But life will likely get weirder before it improves.

AI technology today can generate images that are indistinguishable from their real-life counterparts. Many of us have seen a deepfake YouTube video and shuddered at the uncanny likeness. Deepfakes are now so realistic that the average viewer cannot tell they are computer-generated (like this unsettling deepfake of an already naturally unsettling Tom Cruise).

Other AI programs can generate frighteningly detailed and creative “paintings” from a simple word prompt. These bots can also construct images of realistic scenarios, such as a war-torn landscape or a riot at a political protest.

GPT-3 (Generative Pre-trained Transformer 3) brings the same creative automation to the written word. From simple instructions, it can write human-like text that reads like reported journalism, abstract prose, or social media posts.

All of this advanced technology has many predicting a future with ominous overtones. AI’s ability to produce more human-like content can easily distort the truth.

An AI-generated "painting" made on Starry.ai using the prompt "online community engagement in the style of Caspar David Friedrich"

The Spread of Misinformation

Technology like this wouldn’t be so scary without platforms that can spread content to the masses. We’re already familiar with how this can play out. Misinformation endangered human safety throughout the pandemic. The World Health Organization recently coined the term “infodemic”: “an overabundance of information, including false or misleading information, in digital and physical environments during an emergency.”

Bots on social media promoted fake and extreme political views in 2016, and the top 20 fake articles on Facebook were shared more than the top 20 real articles. Fake articles often play on human emotion, and the more incendiary the content, the more likely it is to be shared. Yellow journalists of the 1800s knew this. A recent Twitter study found that falsehoods were 70% more likely to be retweeted than the truth and reached 1,500 people about six times faster. Imagine how deceptive content could multiply with AI effortlessly writing the articles, making the photos, and sharing the content… all on its own.

Extreme content amplified by algorithms designed to maximize ad revenue makes a dangerous combination.

Exploiting Human Psychology

These algorithms also play on our natural biases. We tend to seek out information that confirms what we already believe, a tendency known as confirmation bias. Confirmation bias interferes with our ability to predict the thoughts and feelings of outside groups.

For example, Democrats may assume that fewer Republicans care about the environment than actually do. And Republicans may assume that fewer Democrats care about veterans than is true. Even when we know, rationally, that we are misjudging someone else, we still fall into the trap of blaming other people as the root of the problem.

Playing into this, people with the most extreme views are often the most outspoken on social media, creating a skewed picture of overall public opinion. Plus, social media apps are addictive and keep us coming back for more. Together, these factors push the us-versus-them lie and accelerate polarization.

Social Media Platforms Are Not the Place for Civic Discussion

Social media is great for connecting, building an audience, and sharing information. But social platforms have also become the central arena for “civic” discussion. This is problematic because of the abundance of falsehoods, polarization, emotionally charged content, and the endless stream of unfiltered content in general.

“The sheer volume of information that is readily available on the internet without proper context or consideration of what real knowledge, insight, and wisdom is —quantity rarely equates with quality—can lead people to believe they know what they’re talking about. Gaining information in the vacuum of the internet can result in people who don’t know what they don’t know,” wrote Jim Taylor in Psychology Today.

What we see online affects our thinking no matter how discerning we might be. Government leaders, like anyone else, are susceptible to online pressures. They encounter the same biases, fake stories, and extreme views as everyone else on social media. But government leaders have a responsibility to seek the truth and combat misinformation, because their decisions lead to policies that can shape quality of life for everyone.

A Better Alternative

Well-written surveys (taken online or by mail) give officials an untainted view of how their residents think without the distraction of trolls, ads, bias, and false narratives. A representative survey allows residents to have an equal say, no matter how extreme or mild their views.

Tristan Harris, a technology ethicist known for “The Social Dilemma,” said there needs to be a technology overhaul that works with our better angels rather than exploiting our “Paleolithic hardwiring.” That means designing an online environment that respects our human vulnerabilities and counteracts confirmation bias and addictive tendencies.

But until social media platforms have a Big Tobacco moment (say, we learn that the health effects of screen time are no longer worth the likes), social media will likely remain the same. And government leaders will still need a reliable source of community input so they can make informed decisions rooted in reality.

Related Articles

- Wicked Problems and the Truth About Polarization in America

- The Growing Threat to Trust in Local Government

- How to Build an Environment for Civil, Civic Engagement Online
